In their article *Nine Ways to Reduce Cognitive Load in Multimedia Learning*, Richard Mayer and Roxana Moreno explore how the way we present information, and how much we present at a time, can affect learners' ability to absorb and process new information.

Mayer and Moreno begin by defining multimedia learning ("learning from words and pictures") and multimedia instruction ("presenting words and pictures that are intended to foster learning") (p. 1). They also explain that they are looking not just for learning but for meaningful learning, where learners demonstrate an understanding of the information by being able to apply it beyond the learning environment. (This is as opposed to learning something just long enough to pass a test and then not being able to do anything useful with it.)

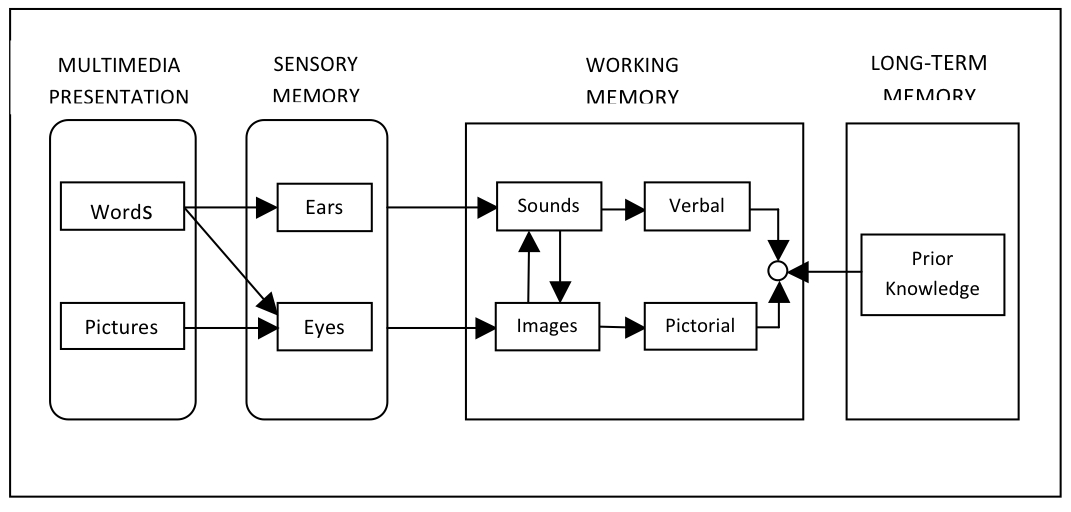

Mayer and Moreno make three assumptions about the brain and learning:

1. Dual-channel assumption - people process information through separate auditory/verbal and visual channels. A person can process input on both channels at the same time, but cannot process two inputs competing for the same channel in the mind at once.

2. Limited capacity assumption - while learning for most people is exponential, the amount of new information someone can meaningfully process at a given time is limited.

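3. Active processing assumption - meaningful learning requires a substantial amount of cognitive processing within the channels. For example, it is a heavier cognitive load to process something visually (reading subtitles) and audibly (listening) at the same time than to process just one channel at a time.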

Based on these assumptions, they developed the cognitive theory of multimedia learning shown here.

Mayer and Moreno assert that you can encourage and increase meaningful learning by being mindful of the channels you require your students to use when designing multimedia materials. They provide five case studies in which the cognitive load overwhelmed learners' capacity for meaningful learning. For each case, they offer one or more solutions for lightening the cognitive load: focusing on essential processing (the elements required to make the material understandable), weeding out incidental processing (unnecessary extras such as background music, animated GIFs, etc.), and being mindful of representational holding (connecting related materials together when possible, to lessen the time the learner has to spend going back to find answers in your materials).

Summary

This was actually my favorite article of the class so far. All of the examples are realistic, and the solutions are simple and reasonable. I think many of us could brainstorm and come up with the same or similar solutions in many of the cases; however, we didn't have names (effects) to describe why we would make each change. Also, I find that it is easier to review and find the cognitive overload problem areas in other people's designs than in my own. I think creating a checklist of these effects, a cheat sheet of sorts, to double-check my designs would be useful.

I will point out, though, that I don't feel these rules are absolute when it comes to language learning through multimedia. Let me give an example:

In developing language learning materials, I often see developers take a video, add subtitles, and call it a "listening exercise." If the subtitles are in the target language, I say, "No, this is now a reading exercise, because your students aren't being forced to negotiate meaning through listening (a harder skill than reading); they'll just be reading." If the subtitles are in English, I say, "No, this is now a cultural exercise, because your students aren't being forced to negotiate understanding through the target language."

What I encourage instructors and developers to do (when creating a listening activity) is to skip word-for-word subtitles in either language and instead caption only some words, popping up a word or short phrase at the bottom of the screen. Relevant reasons to caption include:

1. highlighting salient terms,
2. pointing out a new grammar item,
3. emphasizing a new vocabulary word, and
4. helping with meaning. In language learning, we say the goal is to present material at i+1, which is *just* above the learner's level of understanding, to push them to grow. If you go beyond that, the learner won't learn, because they become too frustrated and shut down.

In this case, we are using multiple modalities to draw attention to a new, important, or difficult item in order to help the learner.